This post was published on 2021-10-12. Content that has passed its expiration date is naturally less useful to readers.

Main topics:

linear regression (with regularization)

linear regression -> model selection

Full rank of a matrix 🔗 [What does it mean for a matrix to have full column rank, and can it be summarized comprehensively? - Zhihu] https://www.zhihu.com/question/270393340

Multicollinearity 🔗 [Multicollinearity - Wikipedia, the free encyclopedia] https://zh.wikipedia.org/zh-hans/%E5%A4%9A%E9%87%8D%E5%85%B1%E7%BA%BF%E6%80%A7
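To make the two ideas above concrete, here is a minimal NumPy sketch. The data is synthetic and the variable names are my own, not taken from the linked pages. It shows that appending a column that is an exact linear combination of the others leaves the rank unchanged, makes [mathjax]\boldsymbol{\Phi}^{\mathrm{T}} \boldsymbol{\Phi}[/mathjax] numerically singular, and that adding [mathjax]\lambda \mathbf{I}[/mathjax] restores a well-conditioned matrix.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical design matrix: 50 samples, 3 independent features.
X = rng.normal(size=(50, 3))
rank_full = np.linalg.matrix_rank(X)

# Append a column that is an exact linear combination of the first two.
X_collinear = np.column_stack([X, 2.0 * X[:, 0] - X[:, 1]])
rank_collinear = np.linalg.matrix_rank(X_collinear)

# With 4 columns but rank 3, the Gram matrix X^T X is (numerically) singular.
gram = X_collinear.T @ X_collinear
cond_plain = np.linalg.cond(gram)

# Adding lam * I shifts every eigenvalue up by lam, so the matrix becomes invertible.
lam = 1.0
cond_regularized = np.linalg.cond(gram + lam * np.eye(gram.shape[0]))

print("rank without collinear column:", rank_full)
print("rank with collinear column:   ", rank_collinear)
print("cond(X^T X):                  ", cond_plain)
print("cond(X^T X + lam * I):        ", cond_regularized)
```

The value `lam = 1.0` is arbitrary here; any positive value removes the exact singularity, and larger values improve the conditioning further.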

A brief introduction to regularization in linear regression: 🔗 [Regularization in linear regression - Zhihu] https://zhuanlan.zhihu.com/p/62457875

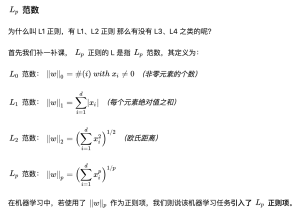

[mathjax]L_p[/mathjax] norm

See: 🔗 [ML basics: normalization, standardization, and regularization - Zhihu] https://zhuanlan.zhihu.com/p/29957294
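As a quick reference, the [mathjax]L_p[/mathjax] norm of a vector is [mathjax]\|\mathbf{w}\|_p=\left(\sum_i |w_i|^p\right)^{1/p}[/mathjax] for [mathjax]p \geq 1[/mathjax]. The sketch below is a direct transcription of that formula; `lp_norm` is a helper name I made up, and `np.linalg.norm` with the `ord` argument computes the same quantity for vectors.

```python
import numpy as np

def lp_norm(w, p):
    """Return the L_p norm (sum_i |w_i|^p)^(1/p) of a vector, for p >= 1."""
    w = np.asarray(w, dtype=float)
    return float(np.sum(np.abs(w) ** p) ** (1.0 / p))

w = np.array([3.0, -4.0])
l1 = lp_norm(w, 1)  # |3| + |-4| = 7
l2 = lp_norm(w, 2)  # sqrt(9 + 16) = 5
l3 = lp_norm(w, 3)

print(l1, l2, l3)
print(np.linalg.norm(w, ord=3))  # NumPy's built-in, for comparison with l3
```

In regularized linear regression, [mathjax]p=1[/mathjax] corresponds to the lasso penalty and [mathjax]p=2[/mathjax] to the ridge penalty.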

Why not just use the closed-form solution [mathjax]\mathbf{w}=\left(\lambda \mathbf{I}+\boldsymbol{\Phi}^{\mathrm{T}} \boldsymbol{\Phi}\right)^{-1} \boldsymbol{\Phi}^{\mathrm{T}} \mathbf{t}[/mathjax]: 🔗 [Linear regression has an exact closed-form solution, so why use gradient descent to get a numerical solution? - SofaSofa] http://sofasofa.io/forum_main_post.php?postid=1002094
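For a small problem the two approaches give the same answer, which the following sketch checks on synthetic data (the data, `lam`, and the iteration count are all assumptions for illustration). It minimizes [mathjax]E(\mathbf{w})=\frac{1}{2}\|\boldsymbol{\Phi} \mathbf{w}-\mathbf{t}\|^2+\frac{\lambda}{2}\|\mathbf{w}\|^2[/mathjax], whose gradient is [mathjax]\boldsymbol{\Phi}^{\mathrm{T}}(\boldsymbol{\Phi} \mathbf{w}-\mathbf{t})+\lambda \mathbf{w}[/mathjax]; setting the gradient to zero gives the closed-form solution above.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (hypothetical): 100 samples, 5 features, known weights plus noise.
Phi = rng.normal(size=(100, 5))
w_true = np.array([1.0, -2.0, 0.5, 0.0, 3.0])
t = Phi @ w_true + 0.1 * rng.normal(size=100)
lam = 0.1

# Closed form: solve (lam * I + Phi^T Phi) w = Phi^T t rather than forming the inverse.
A = lam * np.eye(Phi.shape[1]) + Phi.T @ Phi
w_closed = np.linalg.solve(A, Phi.T @ t)

# Gradient descent on E(w) = 0.5 * ||Phi w - t||^2 + 0.5 * lam * ||w||^2.
# A step of 1 / (largest eigenvalue of A) is small enough to converge for this quadratic.
step = 1.0 / np.linalg.eigvalsh(A).max()
w_gd = np.zeros(Phi.shape[1])
for _ in range(2000):
    grad = Phi.T @ (Phi @ w_gd - t) + lam * w_gd
    w_gd -= step * grad

print("max |w_gd - w_closed| =", np.max(np.abs(w_gd - w_closed)))
```

The practical difference is cost rather than accuracy: with M features, the closed form has to build and solve an M-by-M system (roughly cubic in M), whereas each gradient step only needs matrix-vector products, so the iterative route tends to scale better when M is large.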

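Other cases: 🔗 [In neural networks, why not take the partial derivatives of the loss function, set them to zero, and solve for the optimal weights directly, instead of computing the weights iteratively with gradient descent? - Zhihu] https://www.zhihu.com/question/267021131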

Different regularization parameters in linear regression: useful links (with code):

🔗 [Least Squares Regression in Python — Python Numerical Methods] https://pythonnumericalmethods.berkeley.edu/notebooks/chapter16.04-Least-Squares-Regression-in-Python.html

🔗 [Linear regression in Python (closed-form solution, sklearn machine learning library)] https://www.shuzhiduo.com/A/D854QNLWdE/

Different regularization parameters in linear regression: explanation of the reasons

The regularization parameter (lambda) is an input to your model so what you probably want to know is how do you select the value of lambda. The regularization parameter reduces overfitting, which reduces the variance of your estimated regression parameters; however, it does this at the expense of adding bias to your estimate. Increasing lambda results in less overfitting but also greater bias. So the real question is "How much bias are you willing to tolerate in your estimate?"

https://stackoverflow.com/a/12182410
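The trade-off described in that answer can be observed on a toy problem. The sketch below is only an illustration under my own assumptions (synthetic data, an arbitrary grid of lambda values, and a hypothetical helper `ridge_fit`): as lambda grows, the weight vector shrinks toward zero and the training error rises, which is the bias side of the trade-off. It does not measure the variance reduction directly.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical small, noisy data set: 20 samples, 10 features,
# where only the first feature actually drives the target.
Phi = rng.normal(size=(20, 10))
t = Phi[:, 0] + 0.5 * rng.normal(size=20)

def ridge_fit(Phi, t, lam):
    """Solve (lam * I + Phi^T Phi) w = Phi^T t for w."""
    A = lam * np.eye(Phi.shape[1]) + Phi.T @ Phi
    return np.linalg.solve(A, Phi.T @ t)

lambdas = [0.0, 0.1, 1.0, 10.0, 100.0]
weight_norms = []
train_errors = []
for lam in lambdas:
    w = ridge_fit(Phi, t, lam)
    weight_norms.append(np.linalg.norm(w))
    train_errors.append(np.mean((Phi @ w - t) ** 2))
    print(f"lambda={lam:7.1f}  ||w||={weight_norms[-1]:.4f}  "
          f"train MSE={train_errors[-1]:.4f}")
```

A common way to choose lambda in practice is to evaluate each candidate on held-out data (a validation split or cross-validation) and keep the value with the lowest held-out error, since training error alone always favors lambda = 0.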

Bayesian linear regression

(No content yet.)